FAQ - Airbridge Reports Overview

The metric data in Airbridge reports reflects the most recent update of each data type, so how current it is depends on the refresh cycle of that data type. The refresh cycle of each data type collected by Airbridge is as follows.

Data collected through the Airbridge SDK: Near real-time

Data imported through APIs from ad channels, such as cost data: Every 4 hours

Data imported from third-party data storage: Every 4 hours

SKAN data: Every 24 hours

For more details on the metrics and their refresh cycles, refer to this article.

If you have properly installed the Airbridge SDK, Airbridge tracks the Install (App) event without any additional event settings. However, the Install (App) event is aggregated only when the app is opened after the install. If the app is installed but never opened, no Install (App) event is aggregated.

The channel information of data without any channel parameter value is displayed as $$default$$. When creating tracking links using the Airbridge dashboard, the channel parameter is always added. However, when creating tracking links through different methods, the channel parameter may be left out. In such cases, the channel name is displayed as $$default$$.

Tracking link with channel parameter:

https://abr.ge/@your_app_name/ad_channel?campaign=abc

Tracking link without channel parameter:

https://abr.ge/@your_app_name?campaign=abc
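The difference between the two links can be sketched in a few lines. This is a minimal sketch, not Airbridge's parser: it assumes the channel is the path segment that follows `@your_app_name`, as in the two example links, and the function name `channel_from_tracking_link` is hypothetical.

```python
from urllib.parse import urlparse

DEFAULT_CHANNEL = "$$default$$"


def channel_from_tracking_link(url: str) -> str:
    """Hypothetical helper: return the channel segment of a tracking link, or $$default$$.

    Assumes links follow the pattern https://abr.ge/@app_name[/channel]?params
    """
    # e.g. "/@your_app_name/ad_channel" -> ["@your_app_name", "ad_channel"]
    segments = [s for s in urlparse(url).path.split("/") if s]
    # The first segment is the app name; a second segment, if present, is the channel.
    if len(segments) >= 2:
        return segments[1]
    return DEFAULT_CHANNEL


print(channel_from_tracking_link("https://abr.ge/@your_app_name/ad_channel?campaign=abc"))
print(channel_from_tracking_link("https://abr.ge/@your_app_name?campaign=abc"))
```

The first call returns `ad_channel`; the second has no channel segment and falls back to `$$default$$`.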

For more details on tracking links and parameters, refer to this article.

google.adwords and google are different channels.

google.adwords is the display name of Google Ads in Airbridge. Google Ads data is aggregated under google.adwords as channel name. The display names of the ad channels integrated with Airbridge can be found here.

google is not the display name of Google Ads in Airbridge. It is highly likely that a Custom Channel has been given the name google when creating a tracking link for a Custom Channel.

The data in the Actuals Report may be updated due to the following reasons.

Data correction to fix missing data issues

Varying query results based on Date Option settings

Read on for more details.

The major causes for missing data issues are as follows.

Unstable user network environments: This can lead to delays in event aggregation.

App backgrounded or closed shortly after event occurrence: The event may not be sent to the Airbridge server before the app is sent to the background or closed.

Airbridge performs daily data correction at 9:00 PM UTC to prevent missing data. This correction process adds any missing events from the previous two days (24 hours). As a result, some metrics in the Actuals Report may increase due to data correction.

Therefore, most queried data stays unchanged, while data from two days prior (D-2) may still be adjusted.

Example

Let's say you are querying data in the Actuals Report on January 3rd, 11:00 PM (UTC).

The data from January 1, 3:00 PM (UTC) to January 2, 11:00 PM (UTC) is not finalized yet.

The data collected before January 1, 3:00 PM (UTC) is finalized.

The time it takes for the Actuals Report query results to be finalized can vary depending on the Date Option settings. If the Date Option is set to the Target Event Date or Touchpoint Date, the event will be aggregated on the date the Target Event occurred or the Touchpoint occurred, even if it happened in the past.

As a result, the Actuals Report metrics may change based on the query time until the attribution window ends.

Example

Let's say the Date Option is set to Touchpoint Date in the Actuals Report you queried today, which is February 10.

If the report shows 10 installs for February 1, it means that the touchpoints that occurred on February 1 contributed to 10 installs.

The report may show 11 installs for February 1 on the next day, February 11, because if the attribution window is still open, more installs can be collected as a result of the touchpoints that occurred on February 1.
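The effect of the query date can be reproduced with a small simulation. This is a minimal sketch under stated assumptions: the 14-day attribution window, the year, and the install dates are all hypothetical, and `installs_by_touchpoint_date` is an illustrative helper rather than an Airbridge API.

```python
from datetime import date, timedelta

# Hypothetical attribution window; the real value depends on your settings.
ATTRIBUTION_WINDOW = timedelta(days=14)

# Hypothetical (touchpoint_date, install_date) pairs: 10 installs collected by
# February 10, plus one more install collected on February 11.
installs = [(date(2024, 2, 1), date(2024, 2, 1) + timedelta(days=i)) for i in range(10)]
installs.append((date(2024, 2, 1), date(2024, 2, 11)))


def installs_by_touchpoint_date(rows, touchpoint_day, query_day):
    """Count installs credited to touchpoint_day that are visible on query_day."""
    return sum(
        1
        for touchpoint, install in rows
        if touchpoint == touchpoint_day
        and install <= query_day  # only installs collected so far are visible
        and install - touchpoint <= ATTRIBUTION_WINDOW  # still inside the window
    )


print(installs_by_touchpoint_date(installs, date(2024, 2, 1), date(2024, 2, 10)))
print(installs_by_touchpoint_date(installs, date(2024, 2, 1), date(2024, 2, 11)))
```

Queried on February 10, the February 1 row shows 10 installs; queried on February 11, the same row shows 11.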

The identifiers used to determine whether an event is the first event differ by GroupBys. For more details, refer to this article.

| GroupBy | Identifier used | Event types applicable |
|---|---|---|
| Is First Event per Device ID | Device ID | App events |
| Is First Event per User ID | User ID | App and web events |

When Is First Event per User ID is selected as the GroupBy, the first event is determined across platforms based on the User ID. For example, if Event A first occurred in the web environment and the same event later occurred in the app environment, the event that occurred in the web environment is aggregated as the first event.
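The cross-platform rule amounts to keeping the earliest occurrence per User ID and event name, regardless of platform. The sketch below is a simplified illustration with hypothetical timestamps and field names; it does not reproduce how Airbridge resolves User IDs.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    user_id: str
    name: str
    platform: str  # "app" or "web"
    timestamp: datetime


def first_events_per_user(events):
    """Keep the earliest occurrence of each (user_id, event name) pair across platforms."""
    first = {}
    for event in sorted(events, key=lambda e: e.timestamp):
        # setdefault keeps the first (earliest) event seen for each key.
        first.setdefault((event.user_id, event.name), event)
    return first


events = [
    Event("user-1", "Event A", "app", datetime(2024, 1, 2, 9, 0)),
    Event("user-1", "Event A", "web", datetime(2024, 1, 1, 18, 0)),
]
print(first_events_per_user(events)[("user-1", "Event A")].platform)
```

The web event occurred first, so it is the one kept as the first event.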

Certain metrics in the Actuals Report are categorized as unattributed and are displayed as 0 due to the privacy policy of some ad channels depending on the Date Option settings and specific conditions. Touchpoint data such as Campaign, Ad Group, and Ad Creative won't be available as well.

Refer to the following table to understand which metrics may be categorized as unattributed depending on the Date Option settings.

| Date Option | Metrics that can be categorized as unattributed |
|---|---|
| Event Date | Metrics that include 'Users' in their names |
| Target Event Date | All metrics except for Target Events |
| Touchpoint Date | All metrics |

When metric data that may be categorized as unattributed falls into the conditions below, it is displayed as 0.

Date range: When the attribution was conducted more than 180 days before today (UTC), the metric changes to 0. The exact cutoff may shift slightly due to timezone differences.

Channel: When the winning touchpoint is from Meta ads (facebook.business), Google Ads (google.adwords), or TikTok For Business (tiktok).

Touchpoint type: When the Touchpoint Generation Type of the winning touchpoint is Self-attributing Network. When the winning touchpoint is from TikTok For Business, and the Touchpoint Generation Type is Tracking Link, the metric is also displayed as 0.
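The table and conditions above can be combined into a single check. This is a minimal sketch, not Airbridge's implementation: it assumes that the date range, channel, and touchpoint type conditions must all hold at once, that "earlier than 180 days" means strictly more than 180 days, and that Meta ads touchpoints are Self-attributing Network touchpoints. All function names are hypothetical.

```python
SELF_ATTRIBUTING_NETWORK = "Self-attributing Network"
TRACKING_LINK = "Tracking Link"
PRIVACY_RESTRICTED_CHANNELS = {"facebook.business", "google.adwords", "tiktok"}


def may_be_unattributed(metric_name, date_option, is_target_event=False):
    """Return True if the metric can be categorized as unattributed under the Date Option."""
    if date_option == "Event Date":
        return "Users" in metric_name
    if date_option == "Target Event Date":
        return not is_target_event
    if date_option == "Touchpoint Date":
        return True
    raise ValueError(f"Unknown Date Option: {date_option}")


def meets_zeroing_conditions(days_since_attribution, channel, touchpoint_type):
    """Assumption: the date range, channel, and touchpoint type conditions must all hold."""
    if days_since_attribution <= 180:
        return False
    if channel not in PRIVACY_RESTRICTED_CHANNELS:
        return False
    return touchpoint_type == SELF_ATTRIBUTING_NETWORK or (
        channel == "tiktok" and touchpoint_type == TRACKING_LINK
    )


def displayed_value(value, metric_name, date_option, days_since_attribution,
                    channel, touchpoint_type, is_target_event=False):
    """Return 0 when the metric is both eligible and meets the conditions, else the value."""
    if may_be_unattributed(metric_name, date_option, is_target_event) and \
            meets_zeroing_conditions(days_since_attribution, channel, touchpoint_type):
        return 0
    return value


# 10 Order Complete (App) events attributed to Meta ads roughly 270 days ago.
for option in ("Event Date", "Target Event Date", "Touchpoint Date"):
    print(option, displayed_value(10, "Order Complete (App)", option, 270,
                                  "facebook.business", SELF_ATTRIBUTING_NETWORK))
```

Under these assumptions the loop prints 10 for Event Date and 0 for the other two Date Options, matching the example that follows.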

Let's say, based on UTC, 10 Order Complete (App) events attributed to Meta ads were collected 9 months ago from today. These events fall into the conditions described above and, therefore, can be categorized as unattributed depending on the Date Option settings.

When setting Order Complete (App) as a metric, the following data will be displayed depending on the Date Option.

| Date Option | Metric Data | Description |
|---|---|---|
| Event Date | 10 | Only metrics that include 'Users' in their names are displayed as 0 |
| Target Event Date | 0 | All metrics except for Target Events are displayed as 0 |
| Touchpoint Date | 0 | All metrics are displayed as 0 |

When querying events or touchpoints attributed to Meta ads, Google Ads, or TikTok For Business more than 180 days before today (UTC) with the Date Option set to Event Date, the number of unattributed users may be larger than the number of unattributed events.

This is because the metrics that include 'Users' in their names are categorized as unattributed when the Date Option is set to Event Date due to the privacy policies of Meta ads, Google Ads, and TikTok For Business.

Let's say 20 users each performed the Order Complete (App) event once, 9 months ago (UTC). 10 of the events were attributed to Google Ads, and the other 10 were unattributed. If you change the Date Option to Event Date, the metrics change as follows.

| Metric | When Date Option is NOT Event Date | When Date Option is Event Date |
|---|---|---|
| Number of users who performed the Order Complete (App) event that is attributed to Google Ads | 10 | 0 |
| Number of users who performed the Order Complete (App) event that is unattributed | 10 | 20 (10+10) |
| Unattributed Order Complete (App) | 10 | 10 |
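The asymmetry comes from 'Users' metrics losing their attribution while event counts keep theirs. The sketch below reproduces the numbers in the table; it assumes all events are older than 180 days and models only the two columns shown, and `summarize` is a hypothetical helper.

```python
PRIVACY_RESTRICTED_CHANNELS = {"facebook.business", "google.adwords", "tiktok"}


def summarize(events, event_date_option):
    """events: (user_id, channel or None) pairs, all assumed older than 180 days (UTC).

    Returns (Google Ads users, unattributed users, unattributed events).
    """
    def users_channel(channel):
        # With Event Date, 'Users' metrics lose attribution for privacy-restricted channels.
        if event_date_option and channel in PRIVACY_RESTRICTED_CHANNELS:
            return None
        return channel

    google_users = {u for u, ch in events if users_channel(ch) == "google.adwords"}
    unattributed_users = {u for u, ch in events if users_channel(ch) is None}
    # Event-count metrics keep their original attribution.
    unattributed_events = sum(1 for _, ch in events if ch is None)
    return len(google_users), len(unattributed_users), unattributed_events


# 20 users, one Order Complete (App) event each; the first 10 came from Google Ads.
events = [(f"user-{i}", "google.adwords" if i < 10 else None) for i in range(20)]
print(summarize(events, event_date_option=False))
print(summarize(events, event_date_option=True))
```

The two calls return `(10, 10, 10)` and `(0, 20, 10)`, matching the two columns of the table.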

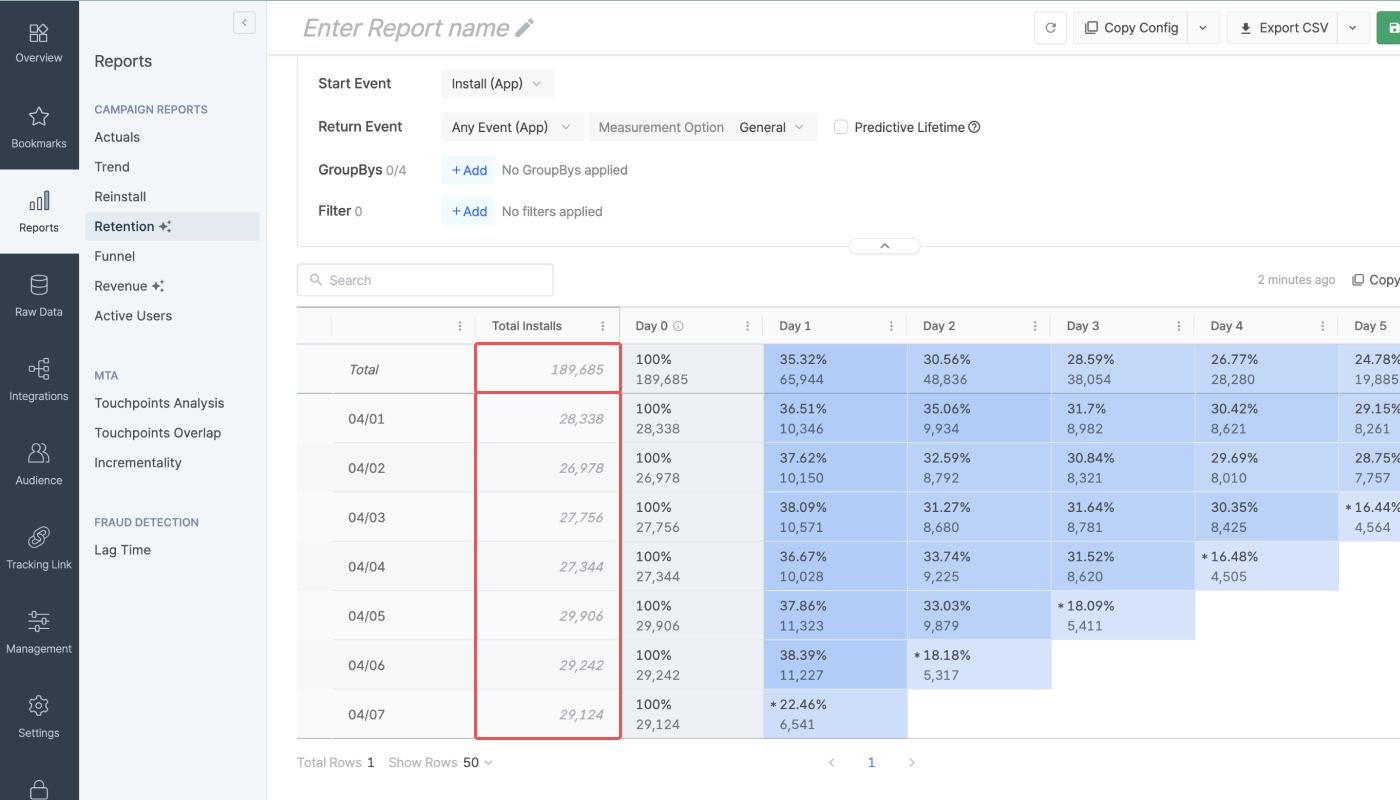

If the Return Events include all Start Events, the retention rate of Day 0, Week 0, Month 0, Hour 0, and Minute 0 is displayed as 100%. This is because the Start Event is also counted as a Return Event, so every user who performed a Start Event is counted as a returned user.

Refer to the example below.

Example

Let’s say 4 users performed the following events on 2024-04-01.

User A: Install (App), Subscribe (App)

User B: Deeplink Open (App), Subscribe (App)

User C: Add To Cart (App), Install (App)

User D: Add To Cart (App), Deeplink Open (App)

The Retention Report has been configured as follows.

Granularity and Date Range: Daily, From 2024-04-01 to 2024-04-05

Start Event: Install (App), Deeplink Open (App)

Return Event: Install (App), Deeplink Open (App), Sign-up (App), Order Complete (App)

The Install (App) and Deeplink Open (App) events are set as Start Events and also as Return Events. Because all users performed at least 1 of the 2 events, all users are considered to have performed both a Start Event and a Return Event. Therefore, the retention rate on Day 0 is displayed as 100%.
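The Day 0 result follows from simple set logic. This simplified sketch only checks which events each user performed on 2024-04-01 and ignores event order within the day; it is an illustration, not how the Retention Report is computed internally.

```python
day0_events = {
    "User A": {"Install (App)", "Subscribe (App)"},
    "User B": {"Deeplink Open (App)", "Subscribe (App)"},
    "User C": {"Add To Cart (App)", "Install (App)"},
    "User D": {"Add To Cart (App)", "Deeplink Open (App)"},
}
start_events = {"Install (App)", "Deeplink Open (App)"}
return_events = {"Install (App)", "Deeplink Open (App)", "Sign-up (App)", "Order Complete (App)"}

# Users who performed at least one Start Event form the cohort.
cohort = {user for user, evs in day0_events.items() if evs & start_events}
# Because every Start Event is also a Return Event, every cohort member "returns".
returned = {user for user in cohort if day0_events[user] & return_events}
rate = 100 * len(returned) / len(cohort)
print(f"Day 0 retention: {rate:.0f}%")
```

All four users are in the cohort and all four match a Return Event, so the rate is 100%.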

The discrepancy is caused because the time periods for aggregating unique users differ.

The top row shows the unique users aggregated across the set date range. If a user performed multiple Start Events in different date buckets during the set date range, the number of retained users is counted as 1.

The sub-rows show the unique users for each date bucket only. If a user performed multiple Start Events in different date buckets during the set date range, that user is counted as a unique user in each date bucket. Therefore, the total number of retained users in the top row may differ from the sum of the numbers of retained users displayed in the sub-rows. For more details on the identifiers used to identify unique users, refer to this article.

Refer to the example below.

Example

A user performed the following events.

Installed the app after clicking Channel Z's video ad, then deleted the app on 2024-01-01.

Installed the app again after clicking Channel Z's banner ad on 2024-01-02.

When the granularity is set to Daily, the Retention Report will show the following result.

Total number of retained users in the top row for Channel Z (From 2024-01-01 to 2024-01-02): 1

Number of retained users in the sub-row (2024-01-01) for Channel Z: 1

Number of retained users in the sub-row (2024-01-02) for Channel Z: 1
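The difference between the top row and the sub-rows can be sketched as two distinct-count operations over different time spans. This is a simplified illustration with a hypothetical `unique_user_counts` helper; it counts unique users per span and does not model the full retention logic.

```python
from datetime import date

# (user_id, channel, start_event_date) for each Start Event in the example.
start_events = [
    ("user-1", "Channel Z", date(2024, 1, 1)),  # install via video ad
    ("user-1", "Channel Z", date(2024, 1, 2)),  # reinstall via banner ad
]


def unique_user_counts(rows, channel):
    """Return (top-row unique users, {date bucket: unique users}) for one channel."""
    top_row = {user for user, ch, _ in rows if ch == channel}
    sub_rows = {}
    for user, ch, day in rows:
        if ch == channel:
            sub_rows.setdefault(day, set()).add(user)
    return len(top_row), {day: len(users) for day, users in sub_rows.items()}


top, subs = unique_user_counts(start_events, "Channel Z")
print(top, subs, sum(subs.values()))
```

The top row counts the user once, while each daily sub-row counts the same user again, so the sub-rows sum to 2.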

The Return Event is aggregated based on the date the event occurred.

Let’s say an app install event occurred at 10:00 PM, 2024-01-01. This user performed the app open event at 11:00 PM, 2024-01-01, and again at 2:00 PM, 2024-01-02.

When setting the Start Event to Install and the Return Event to Any Event, the Retention Report will report the Return Event to have occurred on Day 0 and Day 1.

The number of retained users is calculated by calendar dates. Refer to the example below.

Example

A user performed the following events.

Installed the app at 8:00 PM on 2024-01-01

Opened the app at 10:00 PM on 2024-01-01

Opened the app at 8:00 AM on 2024-01-02

The Retention Report has been configured as follows.

Start Event: Installs (App)

Return Event: Opens (App)

The Retention Report will show the following result.

The number of retained users in Day 0 (2024-01-01): 1

The number of retained users in Day 1 (2024-01-02): 1

The app open event performed at 8:00 AM on 2024-01-02 occurred 12 hours after the app install, which is less than 24 hours. However, because the calendar date changed, the user is counted as having returned to the app on Day 1.
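Bucketing by calendar date rather than by elapsed 24-hour periods can be sketched as follows. This assumes both timestamps are already expressed in the report's timezone; `day_index` is a hypothetical helper.

```python
from datetime import datetime


def day_index(start: datetime, event: datetime) -> int:
    """Day N bucket computed from calendar dates, not from elapsed hours."""
    return (event.date() - start.date()).days


install = datetime(2024, 1, 1, 20, 0)
opens = [datetime(2024, 1, 1, 22, 0), datetime(2024, 1, 2, 8, 0)]

for opened in opens:
    elapsed_hours = (opened - install).total_seconds() / 3600
    print(f"{opened} -> Day {day_index(install, opened)} ({elapsed_hours:.0f}h after install)")
```

The 10:00 PM open falls in Day 0 (2 hours after install), and the 8:00 AM open falls in Day 1 even though only 12 hours have passed.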

The following conditions must be met to enable or view the predictive lifetime metric in the Retention Report.

The granularity must be set to Daily, and the start date of the date range must be at least 3 days earlier than today.

The number of users of a cohort must be at least 30. When the number of users of a cohort falls short, “Insufficient User Count” will show instead of the predictive lifetime.
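The two conditions can be expressed as a single eligibility check. This is a minimal sketch: the function name and the messages other than “Insufficient User Count” are hypothetical, and it reads “at least 3 days earlier than today” as `start_date <= today - 3 days`.

```python
from datetime import date, timedelta

MIN_COHORT_USERS = 30


def predictive_lifetime_status(granularity: str, start_date: date, today: date,
                               cohort_users: int) -> str:
    """Hypothetical check of the conditions for showing the predictive lifetime."""
    if granularity != "Daily":
        return "Unavailable: granularity must be Daily"
    if start_date > today - timedelta(days=3):
        return "Unavailable: start date must be at least 3 days before today"
    if cohort_users < MIN_COHORT_USERS:
        return "Insufficient User Count"
    return "Available"


today = date(2024, 4, 10)
print(predictive_lifetime_status("Daily", date(2024, 4, 7), today, 45))
print(predictive_lifetime_status("Daily", date(2024, 4, 7), today, 12))
```

The first cohort meets both conditions; the second is too small and shows “Insufficient User Count”.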

The predictive lifetime value (pLTV) can be calculated by multiplying the predictive lifetime with the ARPDAU. In the Airbridge dashboard, the pLTV can be monitored using the Revenue Report. Refer to this article for more information.
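The relationship described above can be written as a formula, assuming the predictive lifetime is expressed in days so that multiplying by ARPDAU (average revenue per daily active user) yields revenue per user:

```latex
\text{pLTV} = \text{predictive lifetime} \times \text{ARPDAU}
```

For example, with an illustrative predictive lifetime of 12 days and an ARPDAU of $0.50, the pLTV would be 12 × $0.50 = $6.00.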

The User Count in each sub-row is always smaller than or equal to the total User Count. This is because the User Count in a sub-row is the number of unique users aggregated during the specific time range that sub-row represents, whereas the total User Count in the top row is the number of unique users aggregated during the entire configured date range. The same logic applies to Paying Users, which is a sub-metric.

Let's say a user interacted with your app as described below.

Day 0: Installed app after viewing an ad. Completed an order in the app and deleted the app afterward.

Day 1: Installed the app again after viewing an ad. Completed an order in the app.

In this case, the User Count and Paying Users on Day 0 and Day 1 will show as follows.

Total User Count and Paying Users: 1 each

User Count and Paying Users on Day 0: 1 each

User Count and Paying Users on Day 1: 1 each

Yes, you can query both app and web data in the Active Users Report by selecting app metrics and web metrics when creating a report view.

In the Active Users Report, a unique user is determined by the identifier used for the Calculated by. For more details on the identifiers, refer to this article.

The Active Users Report aggregates unique users based on the set granularity, and therefore, the sum of the DAU for a month is different from the MAU for the same date range.

For example, let's say a user launched your app every day for 30 days. The Active User count is aggregated based on the set granularity.

Daily: Airbridge aggregates daily unique users. Therefore, the DAU is 1 for each day, and the sum of the DAU is 30.

Monthly: Airbridge aggregates monthly unique users. Therefore, the MAU is 1.
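The gap between the summed DAU and the MAU comes from deduplicating over different spans. The sketch below uses one hypothetical user who launches the app every day in April 2024 (30 days).

```python
from datetime import date, timedelta

# One hypothetical user launches the app every day for 30 days.
launches = [("user-1", date(2024, 4, 1) + timedelta(days=i)) for i in range(30)]

# Daily granularity: deduplicate users within each day.
dau = {}
for user, day in launches:
    dau.setdefault(day, set()).add(user)
sum_of_dau = sum(len(users) for users in dau.values())

# Monthly granularity: deduplicate users across the whole month.
mau = len({user for user, _ in launches})
print(sum_of_dau, mau)
```

The sum of the daily counts is 30, while the monthly count is 1.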

Upon configuring GroupBys in the Active Users Report, the unique user count is reaggregated based on the set GroupBys. As a result, the sum of the items after applying the GroupBys may be larger than before.

For example, let's say a user engaged with an ad from channel A and channel B. When selecting Channel as a GroupBy, the user is counted as 1 active user for each channel. This may appear as 2 active users as a sum. However, when removing Channel as a GroupBy, only 1 user will be counted as an active user.
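The same deduplication logic explains the GroupBy behavior. In this sketch with one hypothetical user, grouping by channel deduplicates within each channel only, so the grouped counts sum to more than the ungrouped count.

```python
# (user_id, channel) engagements for one hypothetical user.
engagements = [("user-1", "channel A"), ("user-1", "channel B")]

# With Channel as a GroupBy: unique users are counted per channel.
by_channel = {}
for user, channel in engagements:
    by_channel.setdefault(channel, set()).add(user)
sum_grouped = sum(len(users) for users in by_channel.values())

# Without the GroupBy: unique users are counted once overall.
ungrouped = len({user for user, _ in engagements})
print(sum_grouped, ungrouped)
```

The grouped counts sum to 2, while the ungrouped count is 1.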

No. The average step time of each Step is calculated over a different set of users, because only the users who reached that Step are included. However, you can create a new funnel to view the average time to convert between specific Steps.

For example, if the average step time of Step 3 is 10 minutes and the average step time of Step 4 is 20 minutes, it doesn’t mean that the average time to convert from Step 2 to Step 4 is 30 minutes. To find the average time to convert from Step 2 to Step 4, redesign the funnel so that the Step 2 and Step 4 events are set as Step 1 and Step 3, then check the average conversion time.
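Why the averages do not add up can be shown with hypothetical numbers. In this sketch, three users reach Step 3 but only one reaches Step 4, so each average is taken over a different group of users.

```python
from statistics import mean

# Hypothetical minutes between Steps, per user.
step3_times = {"A": 5, "B": 5, "C": 20}  # Step 2 -> Step 3, all users who reached Step 3
step4_times = {"C": 20}                  # Step 3 -> Step 4, only user C reached Step 4

avg_step3 = mean(step3_times.values())  # averaged over A, B, and C
avg_step4 = mean(step4_times.values())  # averaged over C only

# Step 2 -> Step 4 time, measured only for users who actually reached Step 4.
avg_2_to_4 = mean(step3_times[u] + step4_times[u] for u in step4_times)
print(avg_step3, avg_step4, avg_2_to_4)
```

The Step 3 and Step 4 averages are 10 and 20 minutes, yet the only user who reached Step 4 took 40 minutes from Step 2, not 30.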

If you have successfully set up the Funnel Report, Step 1 should always show a conversion rate of 100%. The reason other Steps show a conversion rate of less than 1% is that they simply have a low conversion rate. Try any of the solutions below and reload the report.

Delete Step 1.

Expand the Conversion Window. A sufficient amount of time may be required for conversions to happen.

Reconsider the user journey and the order of the Steps.

Yes, but if the date range was set using the date operator “Last”, the analysis result may change every time you import the report. Reports with the funnel entrance date range set using “Between” or “Since” will always yield the same results, whereas reports using “Last” will show different results on different days. For example, if you save a report with the funnel entrance date range set to “Last 10 days,” the report will always show the results for the 10 days before the day you import it.
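The difference between a relative and a fixed range can be sketched as follows. This assumes “Last N days” covers the N calendar days ending on the import date; whether Airbridge includes the import date itself is an assumption here, and both helper names are hypothetical.

```python
from datetime import date, timedelta


def last_n_days(n: int, import_day: date):
    """Hypothetical: a relative range covering N calendar days ending on import_day."""
    return import_day - timedelta(days=n - 1), import_day


def between(start: date, end: date):
    """A fixed range stays the same no matter when the report is imported."""
    return start, end


print(last_n_days(10, date(2024, 5, 20)))
print(last_n_days(10, date(2024, 5, 21)))
print(between(date(2024, 5, 11), date(2024, 5, 20)))
```

Importing the same “Last 10 days” report one day later shifts the range by one day, while the “Between” range is unchanged.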
