Google Cloud Storage

Attention

The basics are similar to the AWS S3 integration, but the raw data dump target is Google Cloud Storage. The integration is not provided on the Airbridge dashboard. Please read the instructions below and contact your CSM accordingly.

This integration performs daily dumps of the raw data provided by Airbridge (Tracking Link, Web, App) to Google Cloud Storage.

In the Google Cloud console, go to "IAM & Admin" → "Service Accounts".

Click "+ Create Service Account" and create a new service account.

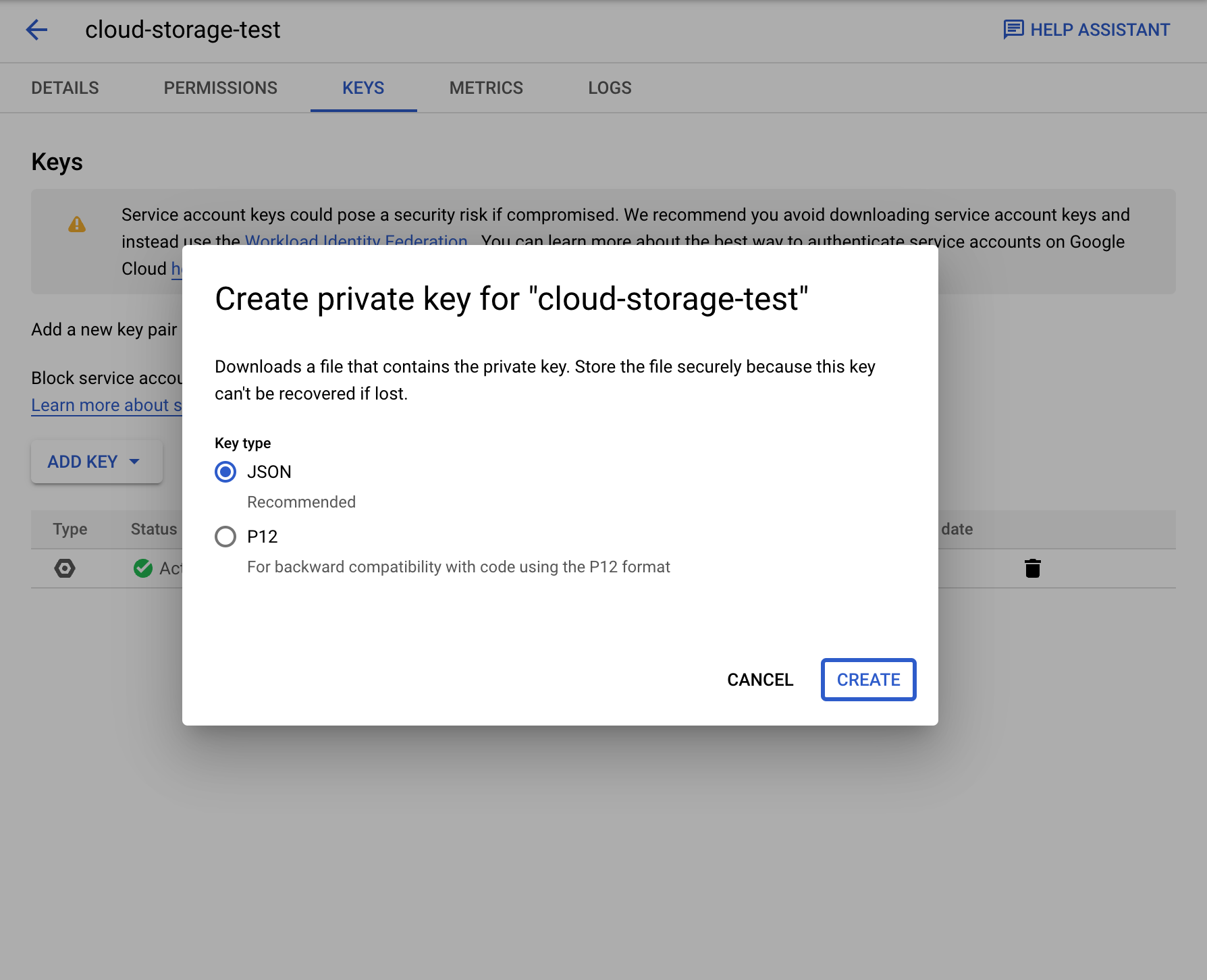

Open the "KEYS" menu of the newly created service account, click "Add Key", and download the key in JSON format.

Create a new bucket in the "Storage Browser" menu.

Click "+ Grant Access" in the "Permissions" menu for the newly created bucket.

Grant "Storage Object Admin" privileges to the client_email in the previously downloaded JSON file.

Please input the following information in the Airbridge dashboard.

- The `client_email` in your JSON file.
- The `private_key` in your JSON file.
- The name of the bucket.
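The three values above can be collected from the downloaded key file with a few lines of Python. This is a minimal sketch, not an Airbridge tool: the function name `read_airbridge_fields` and the example file path are hypothetical, and only the `client_email` and `private_key` field names come from the JSON key itself.

```python
import json
from pathlib import Path


def read_airbridge_fields(key_path: str, bucket_name: str) -> dict:
    """Collect the three values listed above (hypothetical helper)."""
    key = json.loads(Path(key_path).read_text(encoding="utf-8"))
    # client_email and private_key are fields of the service account JSON key.
    return {
        "client_email": key["client_email"],
        "private_key": key["private_key"],
        "bucket_name": bucket_name,
    }


if __name__ == "__main__":
    # "service-account-key.json" and "my-bucket" are placeholder values.
    fields = read_airbridge_fields("service-account-key.json", "my-bucket")
    # Print only the non-secret values; private_key should never be logged.
    print(fields["client_email"], fields["bucket_name"])
```

The `private_key` value is a credential, so avoid printing it or committing the key file to version control.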

Data will be dumped to the following paths.

- Web events: {bucket_name}/{app_name}/web/{version}/date={YYYY-MM-DD}/
- App events: {bucket_name}/{app_name}/app/{version}/date={YYYY-MM-DD}/
- Tracking link events: {bucket_name}/{app_name}/tracking-link/{version}/date={YYYY-MM-DD}/
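When a downstream job needs to locate one day's dump, the path pattern above can be built programmatically. The sketch below returns the object prefix inside the bucket (everything after `{bucket_name}/`). The function name `dump_prefix` is hypothetical, and the `app_name` and `version` values in the example are placeholders, since the actual version string depends on the data spec applied to your integration.

```python
from datetime import date

# The three event sources named in the path patterns above.
SOURCES = ("web", "app", "tracking-link")


def dump_prefix(app_name: str, source: str, version: str, day: date) -> str:
    """Build the object prefix for one source and one day (hypothetical helper)."""
    if source not in SOURCES:
        raise ValueError(f"unknown source: {source!r}")
    # date.isoformat() yields YYYY-MM-DD, matching date={YYYY-MM-DD}.
    return f"{app_name}/{source}/{version}/date={day.isoformat()}/"


if __name__ == "__main__":
    # "myapp" and "v1" are placeholder values.
    print(dump_prefix("myapp", "tracking-link", "v1", date(2024, 1, 5)))
```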

Data will be dumped daily between 19:00 and 21:00 UTC.

Data will be dumped as files that do not exceed 128 MB each, so a single day's dump may consist of several files. Please make sure that your loading process reads every file under the date path, not just one.
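Because one day's data can be split across several files, a loader should iterate over all of them. The following is a minimal sketch that assumes the files for one date path have already been downloaded to a local directory with their `.csv.gz` extension intact, and that they are UTF-8 encoded (an assumption, not something stated in the data spec). The function name `iter_rows` is hypothetical. The sketch does no header handling, so if each file starts with a header row, that row is yielded like any other.

```python
import csv
import gzip
from pathlib import Path
from typing import Iterator, List


def iter_rows(directory: str) -> Iterator[List[str]]:
    """Yield CSV rows from every .csv.gz file in a directory (hypothetical helper)."""
    # Sort the file names so the read order is stable between runs.
    for part in sorted(Path(directory).glob("*.csv.gz")):
        with gzip.open(part, "rt", encoding="utf-8", newline="") as handle:
            yield from csv.reader(handle)
```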

Raw data column information can be found at http://abit.ly/dataspec. The latest "Raw Data Export Dump Integration" version available on the date of integration will be applied.

There are cases where ".csv.gz" files are downloaded as ".csv" from the Google Cloud Storage console. If this is the case, change the ".csv" extension to ".csv.gz" and extract the file using a command such as `gunzip`.
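The rename-and-extract workaround can also be scripted. The sketch below checks whether a file starts with the gzip magic bytes (`0x1f 0x8b`) and, if so, restores the ".gz" extension and writes the decompressed copy next to it. The function names `is_gzip` and `extract_if_gzipped` are hypothetical. Unlike plain `gunzip`, this sketch keeps the compressed file.

```python
import gzip
import shutil
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def is_gzip(path: Path) -> bool:
    """Return True if the file content starts with the gzip magic bytes."""
    with open(path, "rb") as handle:
        return handle.read(2) == GZIP_MAGIC


def extract_if_gzipped(path: str) -> Path:
    """Decompress a gzip file that may be misnamed ".csv"; return the plain CSV path."""
    src = Path(path)
    if not is_gzip(src):
        return src  # already plain text, nothing to do
    if src.suffix != ".gz":
        # Restore the missing extension: events.csv -> events.csv.gz
        src = src.rename(src.with_name(src.name + ".gz"))
    dest = src.with_suffix("")  # events.csv.gz -> events.csv
    with gzip.open(src, "rb") as fin, open(dest, "wb") as fout:
        shutil.copyfileobj(fin, fout)
    return dest
```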
